Reactive emotional responses to machines are commonplace in our lives.

I get angry at the Kroger checkout machines when they never register the item weights correctly. The system locks me out, and an attendant has to verify that I haven’t put anything in the cart that I didn’t pay for. I’m incensed that my time is being wasted and that my moral character is being questioned.

I start to feel hollow and almost sick after running a number of prompts through ChatGPT, as everything it spits out is bullshit of the most boring variety. The content somehow averages out the discourse in a way that betrays a lack of any original thought or concern for truth. I feel disturbed by its ability to sound true and legitimate, not unlike how I would feel about a con artist.

I’m delighted when I open the NYTimes app and click on Spelling Bee. It’s a beautiful game with an excellent user interface, although accessing the hints page is harder than it needs to be. I feel accomplished when I make it to Queen Bee, and I religiously play the game every day. When asked about the game, my response is “Oh, I love Spelling Bee!”

I begin to resent the number of notifications that LinkedIn sends my way and the long, arduous process it takes to adjust them all to a reasonable drip. The app uses whatever means it has available to claim my time and attention, even when I don’t want to give it. I feel manipulated and used.

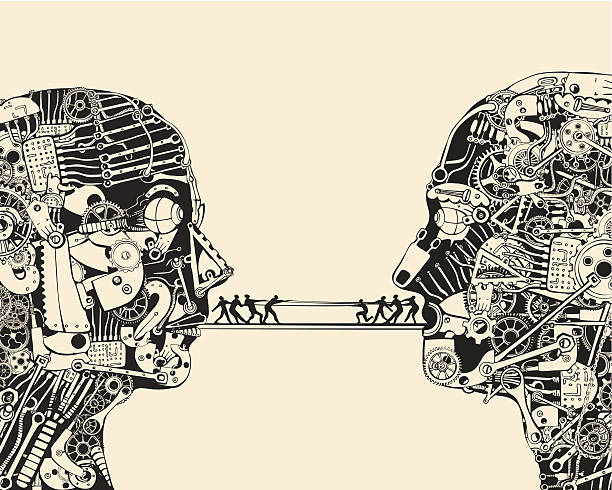

Set aside for now any cases of anger or delight at a machine for simply working or not working. Instead, I want to focus on a range of cases in which it seems that we are having reactive emotional responses to features of machines related to the patterns of values and reasons they express to us in our day-to-day interactions with them.

Are these reactive emotional responses properly distinguishable from the reactive attitudes we feel toward other people? Are they responsibility-conferring in at least some sense? What does this mean for the relative uniqueness of our interpersonal practices?

First, let’s determine who or what I’m responding to in the four cases above:

- I’m responding to the machines themselves.

- I’m responding to the people who designed the machines.

- I’m responding to some combination of the above.

I’m not sure there’s a single right answer among these three. Depending on the technology at play, it could be any of the above.

ChatGPT seems to present a case in which I’m responding to the technology itself and not merely to its creators, especially since its output is not directly determined by the creators’ inputs. This is markedly different from the Kroger scanners, which more directly reflect a corporate interest in loss prevention (even if it comes at the expense of customer satisfaction).

The final two examples seem to call for a combination response. The NYTimes Spelling Bee has its own internal beauty, but it also reflects good design on the part of the game and app designers. The LinkedIn mechanisms effectively manipulate me into spending more time and energy on the app, and this is a result of both the design itself and the inputs from the many designers who developed it.

If it were clear that our reactive emotions were only responding to the people who designed the machines, then we would be firmly in the realm of reactive attitudes toward people, and our inquiry would be closed. However, it’s unclear to me that our responses are only to the human designers, and even more unclear to me that any emotions directed at the machines themselves are irrational.

In “Freedom and Resentment,” P.F. Strawson famously distinguishes the participant stance from the objective stance. The participant stance makes reactive attitudes such as resentment, gratitude, anger, and love appropriate, and it confers responsibility and full membership in the moral community and in human relationships.

The objective stance makes the moral reactive attitudes inappropriate, although emotions like fear or pity may still be felt. Through the objective stance, the person or thing being evaluated is seen as something to be understood and managed, and no responsibility is conferred.

Where do my emotional responses in my daily interactions with these machines fall in this taxonomy? Insofar as they respond to morally salient patterns of values and behaviors in the machines, they seem to go beyond the objective stance. However, insofar as I don’t have full human relationships with these machines and don’t treat them in all the same ways as I would morally responsible human beings, they don’t fit into the participant stance.

As for the kinds of emotions I feel, it doesn’t feel wholly inappropriate to use the language of loving a certain kind of design or feeling angry at an app or interactive machine. These attitudes are not the same as the kind of love or anger felt in deeply involved human relationships, but they are emotional responses that go beyond the repertoire of pity, fear, or a managerial stance.

While ChatGPT and other human-designed interactive machines do express certain patterns of valuing that communicate good will, ill will, or indifference (even if none of the three is actually there), they do not (at least not yet) have the capacity to reason in the way required for full participation in our moral practices. The machines cannot respond to my morally inflected emotions in the way that human reasoners can.

This suggests a third kind of stance somewhere between the participant and the objective stance, where appraising responses and some personal involvement are appropriate but where no full responsibility or moral standing is conferred. These kinds of attitudes might be similar to the morally appraising responses we feel toward AI- or human-generated art or video games.

The key difference that excludes these contemporary machines from the participant realm and from interpersonal human relationships is something along the lines of Strawson’s remark that “If your attitude towards someone is wholly objective, then though you may fight him, you cannot quarrel with him, and though you may talk to him, even negotiate with him, you cannot reason with him. You can at most pretend to quarrel, or to reason, with him.”

What this means is that the participant attitudes are only appropriate in relationships in which the two parties can reason with each other and mutually contest and shape the norms and expectations that bind them. ChatGPT, despite its extensive abilities, cannot yet comprehend moral norms or express a reasoned intention to set any expectations on itself or its users. It can play-act at such an exchange, but it does not have the understanding, consistency, or embodied experience to participate in human moral relationships.

Until machines can themselves reason with us and shape our collective moral norms, our reactive emotional responses to them will not count as participant attitudes and will not confer responsibility.

Elizabeth Cargile Williams

Elizabeth Cargile Williams is a PhD candidate at Indiana University, Bloomington. Their research focuses on moral responsibility and character, but they also have interests in social epistemology and feminist philosophy.