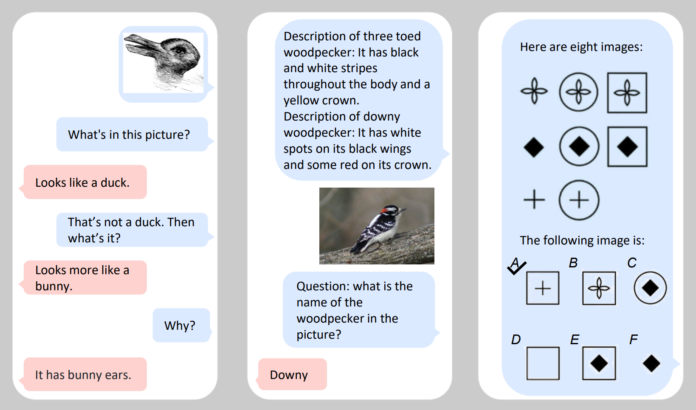

“What’s in this image?” “Looks like a duck.” “That’s not a duck. Then what’s it?” “Looks more like a bunny.”

Earlier this week, Microsoft revealed Kosmos-1, a large language model “capable of perceiving multimodal input, following instructions, and performing in-context learning for not only language tasks but also multimodal tasks.” Or as Ars Technica put it, it can “analyze images for content, solve visual puzzles, perform visual text recognition, pass visual IQ tests, and understand natural language instructions.”

Researchers at Microsoft provided details about the capabilities of Kosmos-1 in “Language Is Not All You Need: Aligning Perception with Language Models”. It’s impressive. Here’s a sample of exchanges with Kosmos-1:

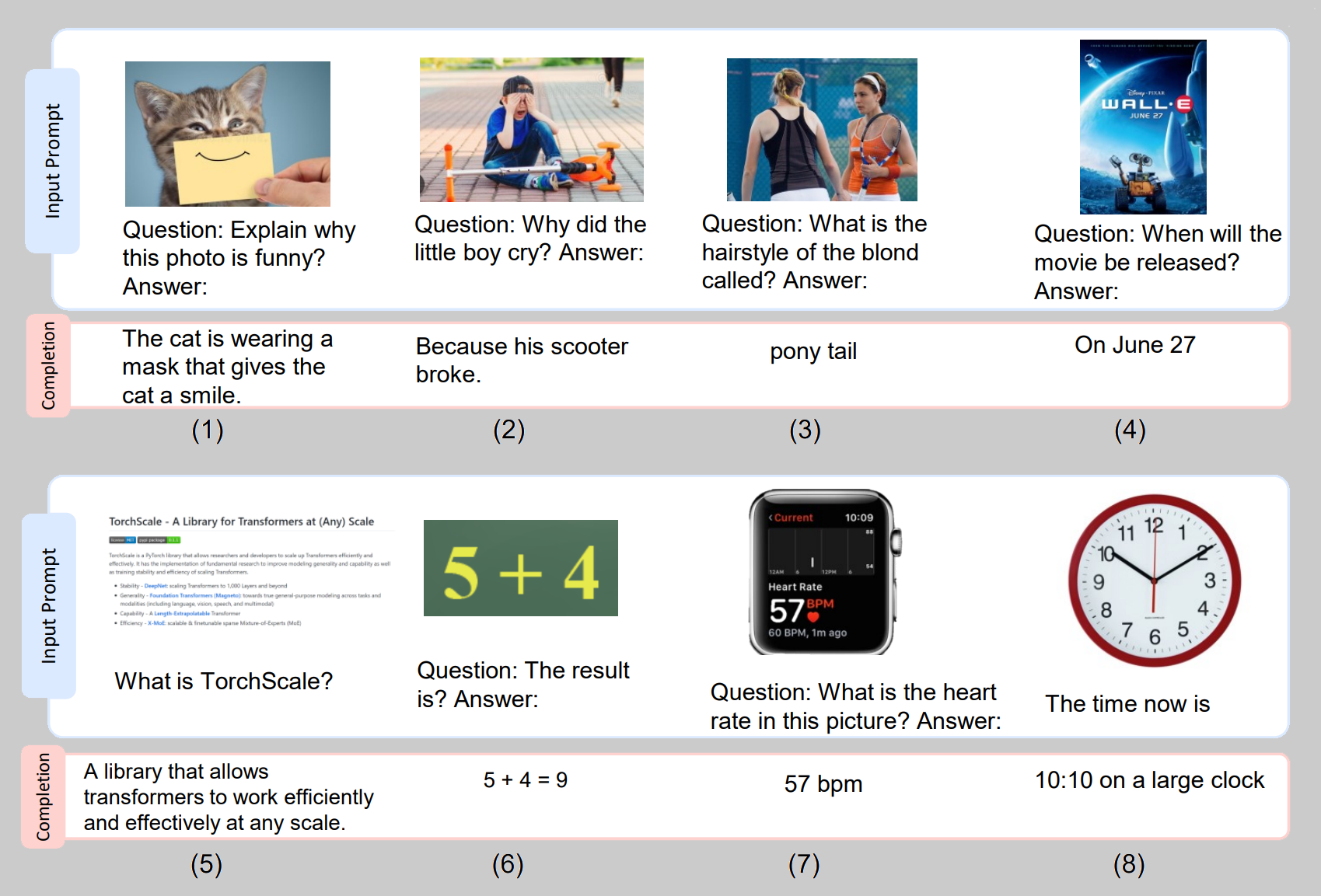

And here are some more:

Selected examples generated from KOSMOS-1. Blue boxes are the input prompt and pink boxes are the KOSMOS-1 output. The examples include (1)-(2) visual explanation, (3)-(4) visual question answering, (5) web page question answering, (6) simple arithmetic equation, and (7)-(8) number recognition.

The researchers write:

Properly handling perception is a necessary step toward artificial general intelligence.

The capability of perceiving multimodal input is critical to LLMs. First, multimodal perception enables LLMs to acquire commonsense knowledge beyond text descriptions. Second, aligning perception with LLMs opens the door to new tasks, such as robotics, and document intelligence. Third, the capability of perception unifies various APIs, as graphical user interfaces are the most natural and unified way to interact with. For example, MLLMs can directly read the screen or extract numbers from receipts. We train the KOSMOS-1 models on web-scale multimodal corpora, which ensures that the model robustly learns from diverse sources. We not only use a large-scale text corpus but also mine high-quality image-caption pairs and arbitrarily interleaved image and text documents from the web.

Their plans for further development of Kosmos-1 include scaling it up in terms of model size and integrating speech capability into it. You can read more about Kosmos-1 here.

Philosophers, I’ve said it before and will say it again: there’s a lot to work on here. There are major philosophical questions not just about the technologies themselves (the ones in existence and the ones down the road), but also about their use, and about their effects on our lives, relationships, societies, work, government, and so on.

P.S. Just a reminder that quite possibly the stupidest response to this technology is to say something along the lines of, “it’s not conscious/thinking/intelligent, so no big deal.”