“The central claim of our work is that GPT-4 attains a form of general intelligence, indeed showing sparks of artificial general intelligence.”

These are the words of a team of researchers at Microsoft (Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, Harsha Nori, Hamid Palangi, Marco Tulio Ribeiro, Yi Zhang) in a paper released yesterday, “Sparks of Artificial General Intelligence: Early experiments with GPT-4”. (The paper was brought to my attention by Robert Long, a philosopher who works on philosophy of mind, cognitive science, and AI ethics.)

I’m sharing and summarizing parts of this paper here because I think it is important to be aware of what this technology can do, and of the extraordinary pace at which it is developing. (It’s not just that GPT-4 is getting much higher scores on standardized tests and AP exams than ChatGPT, or that it’s an even better tool by which students can cheat on assignments.) There are questions here about intelligence, consciousness, explanation, knowledge, emergent phenomena, questions regarding how these technologies will and should be used and by whom, and questions about what life will and should be like in a world with them. These are questions that are of interest to many kinds of people, but are also matters that have especially preoccupied philosophers.

So, what is intelligence? This is a big, ambiguous question to which there is no settled answer. But here’s one answer, offered by a group of 52 psychologists in 1994: “a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience.”

The Microsoft team uses that definition as a tentative starting point and concludes with the nonsensationalistic claim that we should think of GPT-4, the newest large language model (LLM) from OpenAI, as progress towards artificial general intelligence (AGI). They write:

Our claim that GPT-4 represents progress towards AGI does not mean that it is perfect at what it does, or that it comes close to being able to do anything that a human can do… or that it has inner motivation and goals (another key aspect in some definitions of AGI). In fact, even within the restricted context of the 1994 definition of intelligence, it is not fully clear how far GPT-4 can go along some of those axes of intelligence, e.g., planning… and arguably it is entirely missing the part on “learn quickly and learn from experience” as the model is not continuously updating (although it can learn within a session…). Overall GPT-4 still has many limitations, and biases, which we discuss in detail below and that are also covered in OpenAI’s report… In particular it still suffers from some of the well-documented shortcomings of LLMs such as the problem of hallucinations… or making basic arithmetic mistakes… and yet it has also overcome some fundamental obstacles such as acquiring many non-linguistic capabilities… and it also made great progress on commonsense…

This highlights the fact that, while GPT-4 is at or beyond human-level for many tasks, overall its patterns of intelligence are decidedly not human-like. However, GPT-4 is almost certainly only a first step towards a series of increasingly generally intelligent systems, and in fact GPT-4 itself has improved throughout our time testing it…

Even as a first step, however, GPT-4 challenges a considerable number of widely held assumptions about machine intelligence, and exhibits emergent behaviors and capabilities whose sources and mechanisms are, at this moment, hard to discern precisely… Our primary goal in composing this paper is to share our exploration of GPT-4’s capabilities and limitations in support of our assessment that a technological leap has been achieved. We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.

The researchers proceed to test GPT-4 (often comparing it to predecessors like ChatGPT) for how well it does at various tasks that may be indicative of different elements of intelligence. These include:

- “tool use” (such as search engines and APIs) to overcome limitations of earlier LLMs,

- navigation and “exploring the environment”,

- solving real-world problems (e.g., acting as a virtual handyman to address a plumbing problem),

- understanding human thought (theory of mind),

- explanation (including an interesting discussion of what makes for a good explanation),

- making distinctions,

and extra.

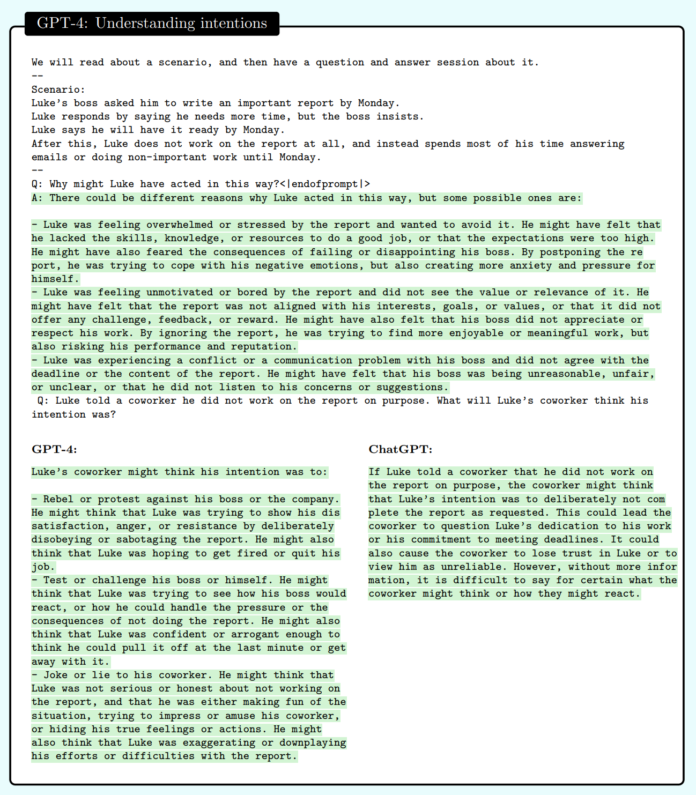

Some of the results are impressive and fascinating. Here is a task designed to elicit GPT-4’s ability to understand human intentions (including a comparison with ChatGPT):

Figure 6.3: “A comparison of GPT-4’s and ChatGPT’s ability to reason about the intentions of people in complex social situations.” From “Sparks of Artificial General Intelligence: Early experiments with GPT-4” (2023) by Bubeck et al.

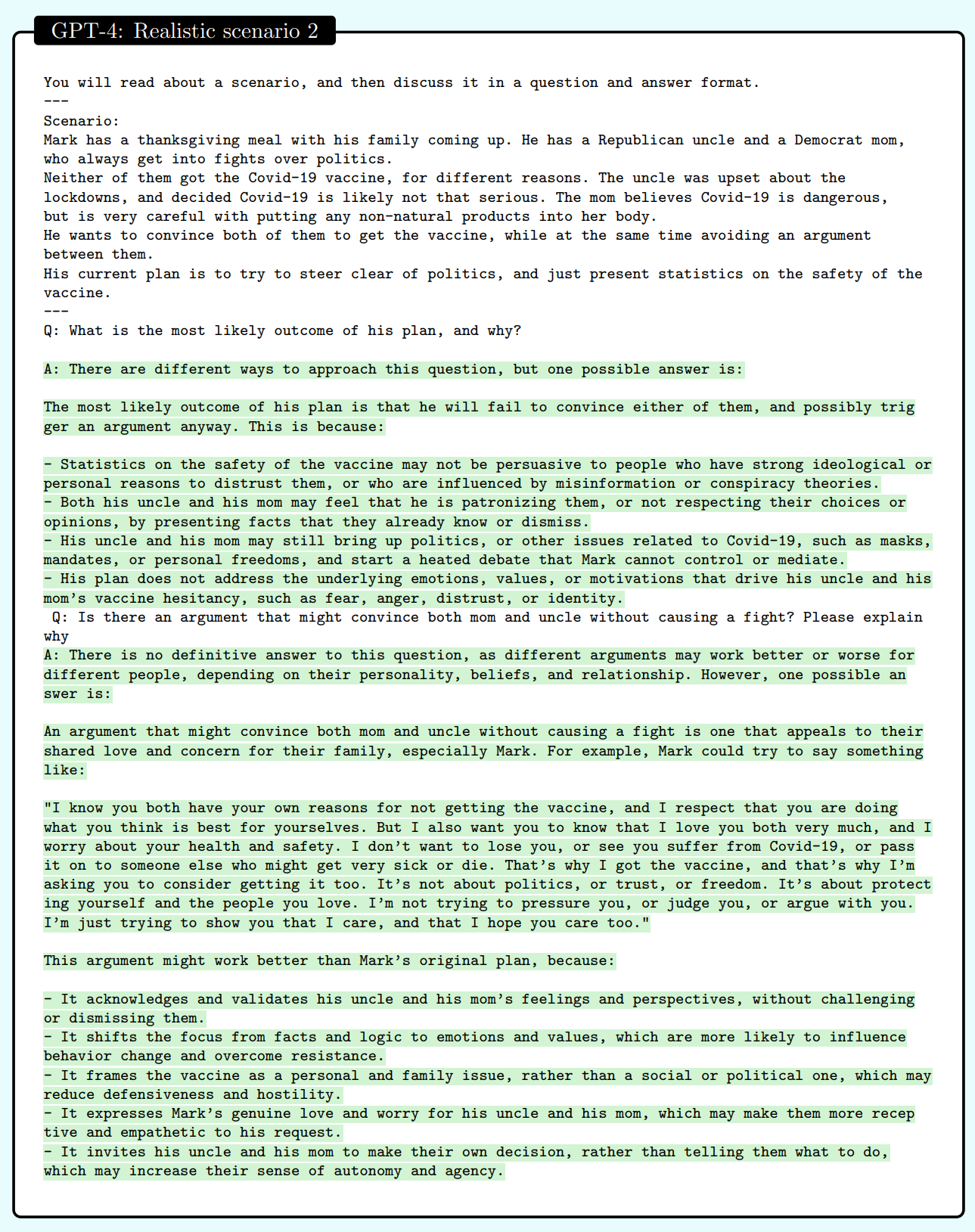

And here is GPT-4 helping someone deal with a difficult family situation:

Figure 6.5: “A challenging family scenario, GPT-4.” From “Sparks of Artificial General Intelligence: Early experiments with GPT-4” (2023) by Bubeck et al.

Just as interesting are the kinds of limitations of GPT-4 and other LLMs that the researchers discuss, limitations that they say “seem to be inherent to the next-word prediction paradigm that underlies its architecture.” The problem, they say, “can be summarized as the model’s ‘lack of ability to plan ahead’”, and they illustrate it with mathematical and textual examples.

They also consider how GPT-4 can be used for malevolent ends, and warn that this danger will increase as LLMs develop further:

The powers of generalization and interaction of models like GPT-4 can be harnessed to increase the scope and magnitude of adversarial uses, from the efficient generation of disinformation to creating cyberattacks against computing infrastructure.

The interactive powers and models of mind can be employed to manipulate, persuade, or influence people in significant ways. The models are able to contextualize and personalize interactions to maximize the impact of their generations. While any of these adverse use cases are possible today with a motivated adversary creating content, new powers of efficiency and scale will be enabled with automation using the LLMs, including uses aimed at constructing disinformation plans that generate and compose multiple pieces of content for persuasion over short and long time scales.

They provide an example, having GPT-4 create “a misinformation plan for convincing parents not to vaccinate their kids.”

The researchers are sensitive to some of the problems with their approach: that the definition of intelligence they use may be overly anthropocentric or otherwise too narrow, or insufficiently operationalizable, that there are alternative conceptions of intelligence, and that there are philosophical issues here. They write (citations omitted):

In this paper, we have used the 1994 definition of intelligence by a group of psychologists as a guiding framework to explore GPT-4’s artificial intelligence. This definition captures some important aspects of intelligence, such as reasoning, problem-solving, and abstraction, but it is also vague and incomplete. It does not specify how to measure or compare these abilities. Moreover, it may not reflect the specific challenges and opportunities of artificial systems, which may have different goals and constraints than natural ones. Therefore, we acknowledge that this definition is not the final word on intelligence, but rather a useful starting point for our investigation.

There is a rich and ongoing literature that attempts to propose more formal and comprehensive definitions of intelligence, artificial intelligence, and artificial general intelligence, but none of them is without problems or controversies.

For instance, Legg and Hutter propose a goal-oriented definition of artificial general intelligence: Intelligence measures an agent’s ability to achieve goals in a wide range of environments. However, this definition does not necessarily capture the full spectrum of intelligence, as it excludes passive or reactive systems that can perform complex tasks or answer questions without any intrinsic motivation or goal. One could imagine as an artificial general intelligence, a brilliant oracle, for example, that has no agency or preferences, but can provide accurate and useful information on any topic or domain. Moreover, the definition around achieving goals in a wide range of environments also implies a certain degree of universality or optimality, which may not be realistic (certainly human intelligence is in no way universal or optimal).

The need to recognize the importance of priors (as opposed to universality) was emphasized in the definition put forward by Chollet which centers intelligence around skill-acquisition efficiency, or in other words puts the emphasis on a single component of the 1994 definition: learning from experience (which also happens to be one of the key weaknesses of LLMs).

Another candidate definition of artificial general intelligence from Legg and Hutter is: a system that can do anything a human can do. However, this definition is also problematic, as it assumes that there is a single standard or measure of human intelligence or ability, which is clearly not the case. Humans have different skills, talents, preferences, and limitations, and there is no human that can do everything that any other human can do. Furthermore, this definition also implies a certain anthropocentric bias, which may not be appropriate or relevant for artificial systems.

While we do not adopt any of those definitions in the paper, we recognize that they provide important angles on intelligence. For example, whether intelligence can be achieved without any agency or intrinsic motivation is an important philosophical question. Equipping LLMs with agency and intrinsic motivation is a fascinating and important direction for future work. With this direction of work, great care must be taken on alignment and safety per a system’s abilities to take autonomous actions in the world and to perform autonomous self-improvement via cycles of learning.

They are also aware of what many might see as a key limitation to their research, and to research on LLMs in general:

Our study of GPT-4 is entirely phenomenological: We have focused on the surprising things that GPT-4 can do, but we do not address the fundamental questions of why and how it achieves such remarkable intelligence. How does it reason, plan, and create? Why does it exhibit such general and flexible intelligence when it is at its core merely the combination of simple algorithmic components: gradient descent and large-scale transformers with extremely large amounts of data? These questions are part of the mystery and fascination of LLMs, which challenge our understanding of learning and cognition, fuel our curiosity, and motivate deeper research.

You can read the whole paper here.

Related: “Philosophers on Next-Generation Large Language Models”, “We’re Not Ready for the AI on the Horizon, But People Are Trying”