“We can apply scientific rigor to the assessment of AI consciousness, in part because… we can identify fairly clear indicators associated with leading theories of consciousness, and show how to assess whether AI systems satisfy them.”

In the following guest post, Jonathan Simon (Montreal) and Robert Long (Center for AI Safety) summarize their recent interdisciplinary report, “Consciousness in Artificial Intelligence: Insights from the Science of Consciousness”.

The report was led by Patrick Butlin (Oxford) and Robert Long, along with 17 co-authors.[1]

How to Tell Whether an AI is Conscious

by Jonathan Simon and Robert Long

Could AI systems ever be conscious? Might they already be? How would we know? These are pressing questions in the philosophy of mind, and they come up more and more in the public conversation as AI advances. You’ve probably read about Blake Lemoine, or about the time Ilya Sutskever, the chief scientist at OpenAI, tweeted that AIs might already be “slightly conscious”. The rise of AI systems that can convincingly imitate human conversation will likely cause many people to believe that the systems they interact with are conscious, whether they are or not. Meanwhile, researchers are taking inspiration from functions associated with consciousness in humans in efforts to further enhance AI capabilities.

Just to be clear, we aren’t talking about general intelligence, or moral standing: we are talking about phenomenal consciousness, that is, the question of whether there is something it is like to be an instance of the system in question. Fish might be phenomenally conscious, but they aren’t generally intelligent, and it is debatable whether they have moral standing. Same here: it is possible that AI systems will be phenomenally conscious before they arrive at general intelligence or moral standing. That means artificial consciousness might be upon us soon, even if artificial general intelligence (AGI) is further off. And consciousness might have something to do with moral standing. So there are questions here that should be addressed sooner rather than later.

AI consciousness is thorny, between the hard problem, persistent lack of consensus about the neural basis of consciousness, and unclarity over what next year’s AI models will look like. If certainty is your game, you’d have to solve these problems first, so: game over.

For the report, we set a target lower than certainty. Instead, we set out to find things that we can be reasonably confident about, on the basis of a minimal number of working assumptions. We settled on three working assumptions. First, we adopt computational functionalism, the claim that the thing our brains (and bodies) do to make us conscious is a computational thing; otherwise, there isn’t much point in asking about AI consciousness.

Second, we assume that neuroscientific theories are on the right track in general, meaning that some of the necessary conditions for consciousness that some of these theories identify really are necessary conditions for consciousness, and some collection of these may ultimately be sufficient (though we do not claim to have arrived at such a comprehensive list yet).

Third, we assume that our best bet for finding substantive truths about AI consciousness is what Jonathan Birch calls a theory-heavy approach, which in our context means we proceed by investigating whether AI systems perform functions similar to those that scientific theories associate with consciousness, then assigning credences based on (a) the similarity of the functions, (b) the strength of the evidence for the theories in question, and (c) one’s credence in computational functionalism. The main alternatives to this approach are either 1) to use behavioural or interactive tests for consciousness, which risks testing for the wrong things, or 2) to look for markers generally associated with consciousness, but this method has pitfalls in the artificial case that it may not have in the case of animal consciousness.

Note that you can accept these assumptions as a materialist, or as a non-materialist. We aren’t addressing the hard problem of consciousness, but rather what Anil Seth calls the “real” problem of saying which mechanisms of which systems (in this case, AI systems) are associated with consciousness.

With these assumptions in hand, our interdisciplinary team of scientists, engineers and philosophers set out to see what we could reasonably say about AI consciousness.

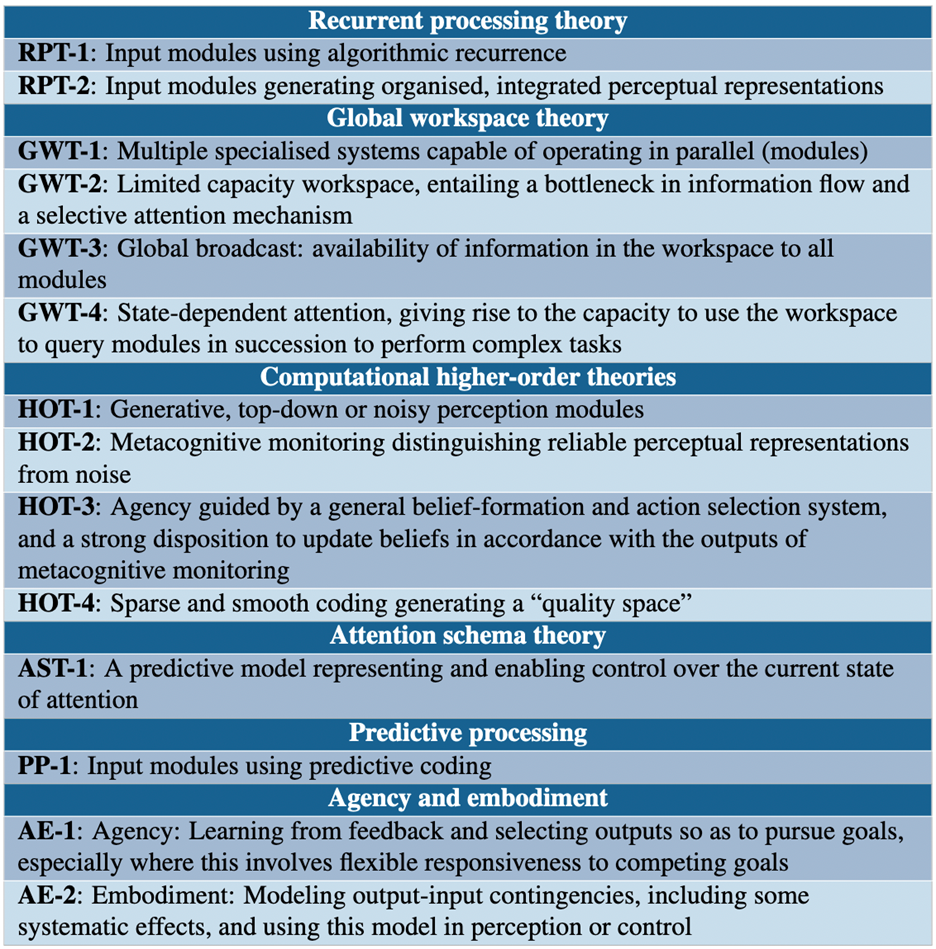

First, we made a (non-exhaustive) list of promising theories of consciousness. We decided to focus on five: recurrent processing theory, global workspace theory, higher-order theories, predictive processing, and attention schema theory. We also discuss unlimited associative learning and ‘midbrain’ theories that emphasize sensory integration, as well as two high-level features of systems that are often argued to be important for consciousness: embodiment and agency. Again, this is not an exhaustive list, but we aimed to cover a representative selection of promising scientific approaches to consciousness that are compatible with computational functionalism.

We then set out to identify, for each of these, a short list of indicator conditions: criteria that must be satisfied by a system to be conscious by the lights of that theory. Crucially, we don’t then attempt to decide between all of the theories. Rather, we build a checklist of all of the indicators from all of the theories, with the idea that the more boxes a given system checks, the more confident we can be that it is conscious, and likewise, the fewer boxes a system checks, the less confident we should be that it is conscious (compare: the methodology in Chalmers 2023).
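As a rough illustration of the checklist idea (a sketch of ours for this summary, not code or a scoring rule from the report), the tally can be written in a few lines of Python. The function name `summarize_checklist`, the abbreviated indicator list, and the example assessment are all assumptions made for illustration; the list uses only the three indicator labels that are named in this post.

```python
def summarize_checklist(indicators, satisfied):
    """Split the checklist into checked and unchecked indicators, keeping order."""
    checked = [name for name in indicators if name in satisfied]
    unchecked = [name for name in indicators if name not in satisfied]
    return checked, unchecked


# Placeholder checklist: only the three indicator labels named in this post.
INDICATORS = ["RPT-1", "GWT-1", "GWT-3"]

# Hypothetical assessment of a made-up system, purely for illustration.
satisfied_by_example = {"GWT-1"}

checked, unchecked = summarize_checklist(INDICATORS, satisfied_by_example)
print(f"checked {len(checked)} of {len(INDICATORS)}; unchecked: {unchecked}")
```

A raw count like this is only a crude summary: the approach described here ties confidence to how many boxes are checked, but this post gives no numeric formula for converting a count into a credence.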

We used this approach to ask two questions. First: are there any indicators that appear to be off-limits or impossible to implement in near-future AI systems? Second, do any existing AI systems satisfy all of the indicators? We answer no to both questions. Thus, we find reason to doubt that any currently existing AI systems are conscious, but we also find no barriers to the existence of conscious AI systems in the near future (though we offer no blueprints, and we do not identify a system in which all indicators would be jointly satisfied).

In the space remaining, we’ll first summarize a sample negative finding: that current large language models like ChatGPT do not satisfy all indicators, and then we’ll discuss a few broader morals of the project.

We analyze several contemporary AI systems: Transformer-based systems such as large language models and PerceiverIO, as well as AdA, Palm-E, and a “virtual rodent”. While much recent discussion of AI consciousness has understandably focused on large language models, this is overly narrow. Asking whether AI systems are conscious is rather like asking whether organisms are conscious. It will very likely depend on the system in question, so we must explore a range of systems.

While we find unchecked boxes for all of these systems, a clear example is our analysis of large language models based on the Transformer architecture, like OpenAI’s GPT series. In particular, we assess whether these models’ residual stream might amount to a global workspace. We find that it does not, because of an equivocation in how this would go: do we think of modules as confined to particular layers? Then indicator GWT-3 (see the above table) is unsatisfied. Do we think of modules as spread out over layers? Then there is no distinguishing the residual stream (i.e., the workspace) from the modules, and indicator GWT-1 is unsatisfied. Moreover, either way, indicator RPT-1 is unsatisfied. We then assess Perceiver and PerceiverIO architectures, finding that while they do better, they still fail to satisfy indicator GWT-3.
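The structure of this dilemma can be laid out explicitly. The following Python sketch is only an illustration of the argument as stated above, not an analysis of any model: the function name `unsatisfied_indicators` and its boolean parameter are hypothetical, and the sets simply restate which indicators are reported as unsatisfied under each reading of “module”.

```python
def unsatisfied_indicators(modules_confined_to_layers: bool) -> set:
    """Indicators reported as unsatisfied for Transformer LLMs under one reading."""
    failed = {"RPT-1"}  # reported as unsatisfied on either reading
    if modules_confined_to_layers:
        # Reading 1: modules are confined to particular layers.
        failed.add("GWT-3")
    else:
        # Reading 2: modules are spread out over layers, so the residual
        # stream cannot be distinguished from the modules.
        failed.add("GWT-1")
    return failed


for confined in (True, False):
    label = "confined to layers" if confined else "spread over layers"
    print(f"modules {label}: unsatisfied {sorted(unsatisfied_indicators(confined))}")
```

Whichever reading is chosen, at least one global workspace indicator stays unchecked, which is why the residual stream is not counted as a global workspace here.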

What are the morals of the story? We find a few: 1) we can apply scientific rigor to the assessment of AI consciousness, in part because 2) we can identify fairly clear indicators associated with leading theories of consciousness, and show how to assess whether AI systems satisfy them. And as far as substantive results go, 3) we found initial evidence that many of the indicator properties can be implemented in AI systems using current techniques, while also finding that 4) no current system appears to be a strong candidate for consciousness.

What about the moral morals of the story? All of our co-authors care about ethical issues raised by machines that are, or are perceived to be, conscious, and some of us have written about them. We do not advocate building conscious AIs, nor do we provide any new information about how one might do so: our results are primarily results of classification rather than of engineering. At the same time, we hope that our methodology and list of indicators contribute to more nuanced conversations, for example, by allowing us to more clearly distinguish the question of artificial consciousness from the question of artificial general intelligence, and to get clearer on which aspects or functions of consciousness are morally relevant.

Thanks for reading! You can also find coverage of our report in Science, Nature, New Scientist, the ImportAI Newsletter, and elsewhere.

[1] The full set of authors is: Patrick Butlin (Philosophy, Future of Humanity Institute, University of Oxford), Robert Long (Philosophy, Center for AI Safety), Eric Elmoznino (Cognitive neuroscience, Université de Montréal and MILA – Quebec AI Institute), Yoshua Bengio (Artificial Intelligence, Université de Montréal and MILA – Quebec AI Institute), Jonathan Birch (Consciousness science, Centre for Philosophy of Natural and Social Science, LSE), Axel Constant (Philosophy, School of Engineering and Informatics, The University of Sussex and Centre de Recherche en Éthique, Université de Montréal), George Deane (Philosophy, Université de Montréal), Stephen M. Fleming (Cognitive neuroscience, Department of Experimental Psychology and Wellcome Centre for Human Neuroimaging, University College London), Chris Frith (Neuroscience, Wellcome Centre for Human Neuroimaging, University College London and Institute of Philosophy, University of London), Xu Ji (Université de Montréal and MILA – Quebec AI Institute), Ryota Kanai (Consciousness science and AI, Araya, Inc.), Colin Klein (Philosophy, The Australian National University), Grace Lindsay (Computational neuroscience, Psychology and Center for Data Science, New York University), Matthias Michel (Consciousness science, Center for Mind, Brain and Consciousness, New York University), Liad Mudrik (Consciousness science, School of Psychological Sciences and Sagol School of Neuroscience, Tel-Aviv University), Megan A. K. Peters (Cognitive science, University of California, Irvine and CIFAR Program in Brain, Mind and Consciousness), Eric Schwitzgebel (Philosophy, University of California, Riverside), Jonathan Simon (Philosophy, Université de Montréal), Rufin VanRullen (Cognitive science, Centre de Recherche Cerveau et Cognition, CNRS, Université de Toulouse).

[Top image by J. Weinberg, created with the help of Dall-E 2 and with apologies to Magritte]