Introduction

The Journal Surveys Project of the American Philosophical Association provides an outlet for philosophers to report their experiences with journals. Authors can submit details about how long a journal took to review their manuscript, how good their experience was with the editors, whether they received comments and of what quality, and whether the manuscript was accepted for publication. Since its creation by Andy Cullison in 2009, over 9,400 surveys had been completed as of April 2024.

There are drawbacks to using author-reported surveys to glean insights into journal metrics: we do not know how accurate the surveys are, and most journals don’t provide the statistics that would allow us to verify the survey results. For instance, the acceptance rate for many journals is widely believed to be overstated in the surveys (see Jonathan Weisberg’s ‘Visualizing the Philosophy Journal Surveys’).

Nonetheless, it’s reasonable to assume the survey results consistently correlate with actual journal performance; that is, the best-performing journals in the Journal Surveys are likely to be the best-performing journals in practice. Hence, some valuable insights should arise solely from comparing relative journal performance in the Journal Surveys. Take a philosophical researcher who wants to send their manuscript to a venue that will review it quickly. While the Journal Surveys won’t be able to provide an accurate estimate of how long ‘quick’ is, they can provide a reasonable estimate of which journals will be ‘quicker’ than others. As such, this report uses the data obtained from the Journal Surveys to analyse which are the best (and worst) performing journals when compared with one another in the following areas: response length, comment chances, quality of comments, and experience with journal editors.

Setup

All survey responses are accessible and easily exported from the Journal Surveys site. This is great for anyone wanting to use them as the basis for further analysis. However, there are several issues with the raw data which, if it is used to create journal-level metrics, undermine their credibility. Issues identified include:

- Some surveys report that they received no comments but also recorded a value for the quality of comments (231 cases).

- Some surveys had response times of 0 (280 cases).

- 7 surveys have review times of greater than 5 years (one of which was 2911 months).

- In several columns, ‘0’ and empty cells both represent no data.

- Several journals have entries listed under slightly different names, such as:

  - ‘Ergo’, ‘Ergo an Open Access Journal of Philosophy’, ‘Ergo: an Open Access Journal of Philosophy’

  - ‘Philosophers Imprint’, ‘Philosophers’ Imprint’

Note: PhilPapers’s David Bourget, who hosts the Journal Surveys site, has since deployed several improvements to the Journal Surveys addressing some of these issues. These include:

- Merging journal duplicates and making changes that will prevent future duplicates from being created.

- Adding validation to review duration that requires the value to be greater than 0.

Some of these issues are less problematic than others and can be fixed by simply removing certain surveys from the overall data or merging entries for journals with name variants. For others, the impact will depend on how journal-level metrics are calculated. For example, the ‘Comment Quality’ column has valid values of ‘1, 2, 3, 4, 5’ but uses both ‘0’ and ‘NULL’ to represent that the survey respondent didn’t answer the comment quality question. If the journal metric is calculated over all integers, then ‘0’ values will be incorrectly included and will consequently lower the overall average.
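To make the averaging concern concrete, here is a minimal Python sketch using made-up numbers (not real survey data). It assumes the comment-quality answers arrive as a list in which both `0` and `None` mean "no answer"; the variable names are illustrative only.

```python
from statistics import mean

# Hypothetical comment-quality answers for one journal.
# Assumption: 0 and None both mean "no answer".
raw_scores = [4, 0, 5, None, 3, 0]

# Naive average over all integers: the 0s are wrongly treated as scores.
naive = mean(s for s in raw_scores if s is not None)

# Average over valid answers only (1 to 5).
valid = [s for s in raw_scores if s is not None and 1 <= s <= 5]
cleaned = mean(valid)

print(naive, cleaned)
```

With these invented values the naive average is pulled well below the average of the three genuine answers, which is the distortion described above.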

To ensure a robust basis for conducting data analysis, the raw data has been cleaned by removing any problematic entries, merging journals, and making ‘0’ and null values uniform (and, where it’s unclear, removing surveys that might distort the data). The clean data for surveys completed before March 2024 is available here. The simple SQL script used is also available here for anyone who wants to conduct similar analysis with the latest data available.
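The cleaning steps described above could be sketched roughly as follows. This is not the SQL script mentioned in the text; it is an illustrative Python version under stated assumptions. The field names (`journal`, `review_months`, `comment_sets`, `comment_quality`), the alias map, and the exact drop rules are all hypothetical, chosen to mirror the issues listed earlier.

```python
# Hypothetical alias map for merging journal name variants.
ALIASES = {
    "Ergo an Open Access Journal of Philosophy": "Ergo",
    "Ergo: an Open Access Journal of Philosophy": "Ergo",
    "Philosophers Imprint": "Philosophers' Imprint",
}

MAX_REVIEW_MONTHS = 60  # 5 years; assumed cut-off for implausible review times


def clean(surveys):
    """Return cleaned copies of the survey rows, dropping problematic ones."""
    cleaned = []
    for row in surveys:
        row = dict(row)  # copy so the input is not mutated
        row["journal"] = ALIASES.get(row["journal"], row["journal"])
        # Treat 0 and None alike as "no answer" for comment quality.
        if not row.get("comment_quality"):
            row["comment_quality"] = None
        months = row.get("review_months")
        # Drop rows with missing, zero, or implausibly long review times.
        if not months or months > MAX_REVIEW_MONTHS:
            continue
        # Drop contradictory rows: no comments received, yet a quality score given.
        if row.get("comment_sets") == 0 and row["comment_quality"] is not None:
            continue
        cleaned.append(row)
    return cleaned


# Made-up rows for illustration only.
sample = [
    {"journal": "Philosophers Imprint", "review_months": 2.5, "comment_sets": 1, "comment_quality": 4},
    {"journal": "Ergo", "review_months": 0, "comment_sets": 0, "comment_quality": 0},
    {"journal": "Ergo", "review_months": 2911, "comment_sets": 2, "comment_quality": 3},
    {"journal": "Ergo: an Open Access Journal of Philosophy", "review_months": 1.0, "comment_sets": 0, "comment_quality": 0},
    {"journal": "Ergo", "review_months": 3.0, "comment_sets": 0, "comment_quality": 5},
]
result = clean(sample)
print(len(result), [r["journal"] for r in result])
```

Dropping contradictory rows outright, rather than guessing which field is wrong, is one reading of "removing surveys that might distort the data"; a different rule would give different counts.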

The clean dataset contains the results of 8,512 surveys. However, many of these surveys are from some time ago and only 1,568 surveys (18.42%) were submitted in the last 5 years. Older data might still be relevant to current journal operations, particularly as philosophy journal editors tend to have long tenures and journal practices change infrequently, but I’ll focus on the last 5 years.

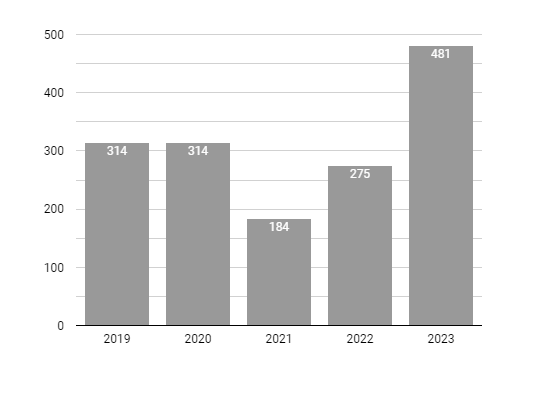

Figure 1 – Annual Survey Responses 2019-2023

Each year averaged 313.6 surveys. 2021 saw a reduction in the number of people submitting surveys, from 314 in 2020 down to 184 in 2021, but numbers have since recovered to record highs with 481 surveys completed in 2023. I’d speculate that the 2021 slump was related to the pandemic but I have no means of corroborating that claim. Otherwise, the surveys seem to be more popular than ever and, if this trend continues, it should make future results increasingly reliable.

Survey responses are spread out among 97 journals, for an average of 16.2 per journal. However, they aren’t evenly distributed, and a small number of journals account for a large proportion of the overall responses; the ten journals with the most responses account for 42% (660) of all surveys in the last 5 years. Conversely, there are many journals with a small number of surveys; 52 journals (53.6%) had fewer than 10 surveys in the last 5 years. This makes any journal-level metrics unreliable for those journals with a limited number of surveys.

To create metrics that have a modest degree of credibility, the focus will be restricted to the journals that have the most surveys. Somewhat arbitrarily, as only 19 journals recorded more than 30 completed surveys in the last 5 years, only these journals will feature from here on. While the threshold could be higher, I’m assuming that 30 surveys will lead to reasonably trustworthy results. These journals are: Philosophical Studies (P Studies), Analysis, Australasian Journal of Philosophy (AJP), Synthese, Ergo, Philosophical Quarterly (PQ), Philosophy and Phenomenological Research (PPR), Philosophers’ Imprint (Imprint), Nous, Journal of Ethics & Social Philosophy (JESP), Mind, Ethics, Pacific Philosophical Quarterly (PPQ), Journal of Philosophy (JoP), European Journal of Philosophy (EJP), Journal of Moral Philosophy (JMP), Canadian Journal of Philosophy (CJP), Philosophy and Public Affairs (PPA), and the Journal of the American Philosophical Association (JoAPA). These are ordered descending by the number of surveys; the number of surveys for each journal is depicted in Figure 2.
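The selection rule above (keep only journals with more than 30 completed surveys, ordered by survey count) can be sketched in a few lines of Python. The function name and the sample journals are hypothetical, and the counts are invented for illustration.

```python
from collections import Counter

MIN_SURVEYS = 30  # threshold from the text: more than 30 completed surveys


def journals_over_threshold(journal_names, minimum=MIN_SURVEYS):
    """Return (journal, count) pairs with more than `minimum` surveys, most-surveyed first."""
    counts = Counter(journal_names)
    return [(j, n) for j, n in counts.most_common() if n > minimum]


# Made-up counts for illustration only: one survey row per journal name.
names = ["Journal A"] * 45 + ["Journal B"] * 30 + ["Journal C"] * 31 + ["Journal D"] * 4
selected = journals_over_threshold(names)
print(selected)
```

Note the strict comparison: a journal with exactly 30 surveys is excluded, matching "more than 30" in the text.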

Figure 2 – Survey Responses by Journal 2019-2023

Finally, it’s worth noting that if the journals that receive the most submissions correlate with the journals that have the most survey responses, then these journals are likely those whose results most philosophers will be interested in.

Results of the Journal Surveys: Response Length

Survey respondents are asked about the ‘Initial verdict review time’ for their submitted manuscript. The answer is recorded in months, where decimals may be used to represent partial months. Across all surveys, the average time for journals to return the initial verdict was 3.22 months. 866 surveys (55.3%) reported a response time of less than 3 months, 468 (29.8%) reported between 3-6 months and 234 (14.9%) reported longer than 6 months. Individual journal averages are depicted in Figure 3.
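As a rough sketch of how the average and the three response-time bands might be computed, the Python below works on a plain list of review times in months. The function name and sample values are hypothetical, and the handling of the boundaries (exactly 3 or exactly 6 months falling in the middle band) is an assumption, since the text does not specify it.

```python
from statistics import mean


def summarise_review_times(months):
    """Return the average and the share of surveys in each response-time band."""
    total = len(months)
    under_3 = sum(1 for m in months if m < 3)
    over_6 = sum(1 for m in months if m > 6)
    between = total - under_3 - over_6  # 3 to 6 months inclusive (assumed boundaries)
    return {
        "average": round(mean(months), 2),
        "under_3": round(100 * under_3 / total, 1),
        "3_to_6": round(100 * between / total, 1),
        "over_6": round(100 * over_6 / total, 1),
    }


# Made-up review times in months, for illustration only.
summary = summarise_review_times([0.5, 1.5, 2.0, 3.0, 4.5, 6.0, 7.0, 9.5])
print(summary)
```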

Figure 3 – Average Response Time by Journal (in months)

Journals that recorded a notably lower average than the overall survey average include Ergo with an average time of 1.47 months, Analysis with 1.75 months, and Philosophy and Public Affairs with 1.75 months. While one might expect response times for Analysis to be quicker because the journal only publishes papers of 4,000 words or fewer, Ergo has the lowest overall average. Moreover, Ergo and Philosophy and Public Affairs are the only two journals of the 19 where no survey reported a response taking longer than 6 months.

Journals that recorded a notably higher average than the overall survey average include Pacific Philosophical Quarterly with an average time of 7.05 months and the Journal of Philosophy with 5.55 months. Pacific Philosophical Quarterly’s high average is largely due to 2021, when its average climbed to 9.77 months. It has since come down to 4.6 months in 2023 which, although greatly improved, would still make it one of the worst performers. The Journal of Philosophy’s response times are consistently on the lengthier side, with each year’s average topping 5 months.

Results of the Journal Surveys: Comment Chances

Survey respondents are asked about the ‘Sets of reviewer comments initially provided’, where the choices of response are: ‘0’, ‘1’, ‘2’, ‘3’, ‘4’ or ‘5’. The results of this question can be used to determine what proportion of surveys received comments from the journal on their submitted manuscript. Across all surveys, 67% of surveys reported receiving 1 or more sets of reviewer comments. Individual journal results are depicted in Figure 4.
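The proportion described above (surveys reporting 1 or more sets of reviewer comments, per journal) could be computed along these lines. This is a minimal sketch: the function name, the `(journal, comment_sets)` row shape, and the sample data are all hypothetical.

```python
from collections import defaultdict


def comment_rate_by_journal(rows):
    """Map each journal to the % of its surveys reporting 1 or more sets of comments."""
    totals = defaultdict(int)
    with_comments = defaultdict(int)
    for journal, comment_sets in rows:
        totals[journal] += 1
        if comment_sets >= 1:
            with_comments[journal] += 1
    return {j: round(100 * with_comments[j] / totals[j]) for j in totals}


# Made-up (journal, sets of comments) pairs for illustration only.
rows = [
    ("Journal A", 2), ("Journal A", 0), ("Journal A", 1), ("Journal A", 1),
    ("Journal B", 0), ("Journal B", 0), ("Journal B", 1), ("Journal B", 0),
]
rates = comment_rate_by_journal(rows)
print(rates)
```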

Figure 4 – % of Manuscripts Receiving Comments by Journal

Journals that recorded a notably higher result than the overall survey result include Synthese with 99% of manuscripts returned to authors with comments, the Journal of the American Philosophical Association with 90%, the Australasian Journal of Philosophy with 89%, and Analysis with 85%.

Journals that recorded a notably lower result than the overall survey result include Philosophy and Phenomenological Research with 26% of manuscripts returned to authors with comments, the Journal of Moral Philosophy with 28%, Pacific Philosophical Quarterly with 31%, and Philosophers’ Imprint with 31%.

One might expect review times to correlate with comment %, as more papers being sent out for review should increase the average review time and, conversely, more papers being desk rejected should decrease average review times. This thinking holds for some journals: Synthese’s average review time is on the lengthier side, but it provides comments on 99% of manuscripts; Philosophers’ Imprint’s average review time is on the shorter side, but it only provides comments on 31% of manuscripts. However, for many journals, factors beyond the proportion of papers sent for external review must be at play; journals with quick review times and high comment % include Ergo, the Journal of the American Philosophical Association, Analysis, and Philosophical Quarterly.
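One way to check whether review times and comment % move together across journals would be a Pearson correlation coefficient over the per-journal figures. The sketch below is only an illustration of that idea: the report itself does not compute a correlation, the `pearson` helper is hand-written, and the per-journal values are invented.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Made-up per-journal figures for illustration only.
avg_review_months = [1.5, 2.0, 3.0, 4.0, 5.5]
comment_percent = [80, 40, 60, 90, 30]
r = pearson(avg_review_months, comment_percent)
print(round(r, 2))
```

A value near +1 would support the expectation that slower journals comment more often, while a value near 0 would suggest that other factors dominate, as the text argues is the case for many journals.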

Results of the Journal Surveys: Comment Quality

Before diving into individual journal results for comment quality, it’s worth noting that, as many journals only provide comments on some manuscripts, the 19 journals that were selected as the focus because they have the most completed surveys won’t necessarily be those with the most values for comment quality. The number of surveys with a value for comment quality for each journal is depicted in Figure 5. It shows that most have had a significant drop in the number of surveys with values for comment quality, and this will detrimentally affect the reliability of those journal averages. To remain consistent, the same 19 journals will be compared but, for those on the lower side, caution is advised.

Figure 5 – Surveys with answers to ‘Comment Quality’ by Journal

Survey respondents are asked about the ‘Quality of reviewer comments’ provided on their submitted manuscript. The choices of response are: ‘1 (Very poor)’, ‘2 (Poor)’, ‘3 (OK)’, ‘4 (Good)’, and ‘5 (Excellent)’. Across all surveys, the average score for comment quality was 3.44 out of 5. Individual journal averages are depicted in Figure 6.

Figure 6 – Average Comment Quality (out of 5) by Journal

Journals that recorded notably higher averages than the overall survey average (and each had more than 60 responses to the comment quality question) include Synthese with an average score of 3.72 out of 5 and Analysis with 3.52. Journals that recorded notably lower averages than the overall survey average (and each had more than 60 responses to the comment quality question) include the Australasian Journal of Philosophy with an average of 3.17 out of 5 and Philosophical Studies with 3.18. While Philosophers’ Imprint, Pacific Philosophical Quarterly, and Philosophy and Phenomenological Research performed poorly, they were also among those with the fewest surveys completed, with 15, 11, and 14 respectively.

Results of the Journal Surveys: Experience with Editors

Survey respondents are asked about their ‘Overall experience with editors’. The choices of response are: ‘1 (Very poor)’, ‘2 (Poor)’, ‘3 (OK)’, ‘4 (Good)’, and ‘5 (Excellent)’. Across all surveys, the average score for experience with editors was 3.51 out of 5. Individual journal averages are depicted in Figure 7.

Figure 7 – Average Experience with Editors (out of 5) by Journal

Journals that recorded a notably higher average than the overall survey average include Synthese with an average score of 4.24 out of 5, Analysis with 4.01, and the Journal of the American Philosophical Association with 4.

Many journals recorded a lower average than the overall survey average, from Philosophers’ Imprint at 3.21 down to Ethics at 2.94, but Pacific Philosophical Quarterly stands out with an average score of 2.03. Unlike response times, where one year had a large impact on the journal’s overall performance, Pacific Philosophical Quarterly is consistently one of the worst performers each year.

Conclusion

As I stated at the start, all one can hope to discern from this report with any degree of credibility are comparisons between various journals. For the 19 journals compared, one can look at individual journal performance and draw conclusions about where a manuscript is likely to be reviewed quicker than elsewhere, where a manuscript is more likely to be returned with comments, where those comments are likely to be of higher quality than elsewhere, or where one’s experience with the journal’s editors is likely to be more pleasant than elsewhere. To make assessing relative journal performance easier, the results contained within this report have been placed into an interactive table here.

There are many ways the analysis in this report could be improved. The simplest is increasing the sample size used to create journal averages. Anyone is free to submit their experience with a journal, and doing so will both improve the accuracy of journal averages and allow new journals to be included in future reports of this kind.

However, if we want to have an understanding of journal operations beyond their relative performance when compared to other journals (i.e., to know how long a review is likely to take with any given journal), another approach is required. The most obvious would be for more journals to provide this information, either on their website or as part of another survey project (the Philosophy Journal Insight Project will be running this kind of survey in the summer of 2024).

Sam Andrews

Sam Andrews is a recent PhD graduate from the University of Birmingham who specialises in Metaphysics, Epistemology, and the Philosophy of Science. He is the director of the Philosophy Journal Insight Project, a project that aims to improve the transparency of journal practices and operations.